‘Most harmful’ AI revealed in new safety study

None of the eight leading AI firms scored well when evaluated for existential risk

The majority of artificial intelligence companies are failing to manage the catastrophic risks that come with the technology, according to a new study.

The assessment by AI safety experts at the nonprofit Future of Life Institute found that the eight leading AI firms “lack the concrete safeguards, independent oversight and credible long-term risk-management strategies that such powerful systems demand.”

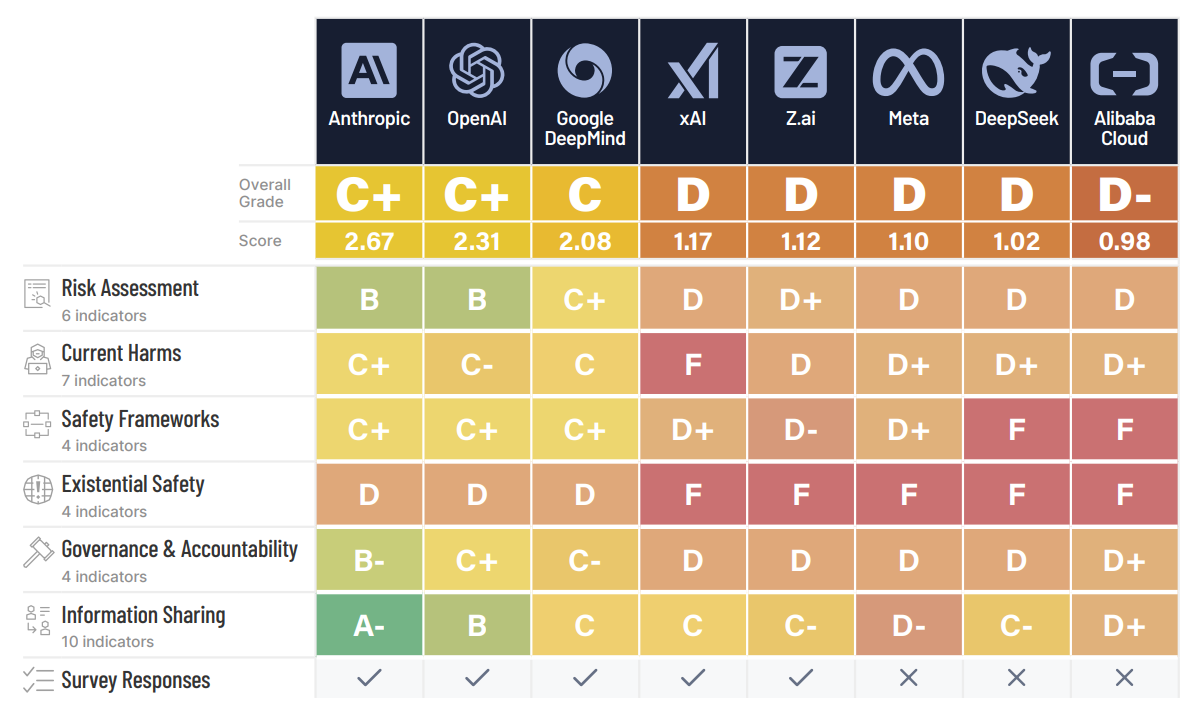

US companies received the best marks in the AI Safety Index, with Anthropic ahead of ChatGPT creator OpenAI and Google DeepMind. Chinese companies recorded the lowest overall grades, with Alibaba Cloud coming just behind DeepSeek.

No company scored above a D when evaluated for existential risk, while Alibaba Cloud, DeepSeek, Meta, xAI and Z.ai all scored an F.

“Existential safety remains the sector’s core structural failure, making the widening gap between accelerating AGI/superintelligence ambitions and the absence of credible control plans increasingly alarming,” the study noted.

“While companies accelerate their AGI and superintelligence ambitions, none has demonstrated a credible plan for preventing catastrophic misuse or loss of control.”

The report’s authors called for AI companies to be more transparent about their own safety assessments and to do more to protect users from nearer-term harms such as AI psychosis.

“AI CEOs claim they know how to build superhuman AI, yet none can show how they’ll prevent us from losing control – after which humanity’s survival is no longer in our hands,” said Stuart Russell, a professor of computer science at UC Berkeley.

“I’m looking for proof that they can reduce the annual risk of control loss to one in a hundred million, in line with nuclear reactor requirements. Instead, they admit the risk could be one in 10, one in five, even one in three, and they can neither justify nor improve those numbers.”

A representative for OpenAI said the company was working with independent experts to “build strong safeguards into our systems, and rigorously test our models”.

A Google spokesperson said: “Our Frontier Safety Framework outlines specific protocols for identifying and mitigating severe risks from powerful frontier AI models before they manifest.

“As our models become more advanced, we continue to innovate on safety and governance at pace with capabilities.”

The Independent has contacted Alibaba Cloud, Anthropic, DeepSeek, xAI and Z.ai for comment.
