How easy would it be to snuff out humanity this century?

Astronomer Royal Martin Rees considers the events – manmade or natural – that could trigger the end

Make no mistake, Covid-19 should not have struck us so unawares. Why were even rich countries so unprepared? It’s because politicians and the public have a local focus. They downplay the long term and the global. They ignore Nate Silver’s maxim: “The unfamiliar is not the same as the improbable.”

Indeed, we’re in denial about a whole raft of newly emergent threats to our interconnected world that could be devastating.

Some, like climate change and environmental degradation, are caused by humanity’s ever heavier collective footprint. We know them well but we fail to prioritise countermeasures because their worst impact stretches beyond the time-horizon of political and investment decisions. It’s like the proverbial boiling frog – contented in a warming tank until it’s too late to save itself.

Another class of threat – global pandemics and massive cyber attacks, for instance – is immediately destructive and could happen at any time. The worst of them could be so devastating that one occurrence is too many. And their probability and potential severity are increasing.

Some natural events – earthquakes, asteroids and solar flares – have an unchanging annual probability, unaffected by humans, but their economic and human costs get greater as populations grow and global infrastructure becomes more valuable and vulnerable. So we need to worry about them more than our predecessors did.

Indeed, I fear we are guaranteed a bumpy ride through this century. Covid-19 must be a wake-up call, reminding us – and our governments – that we’re vulnerable.

First, a flashback. The most extreme threat for my now-elderly generation, when we were young, was nuclear annihilation. President Kennedy put the odds of a nuclear war over Cuba as between one in three and evens. Robert McNamara, his defence secretary, said when older and wiser that “we were lucky”. And we’ve learnt of various false alarms and lucky escapes from accidental war during the 1970s and 1980s.

Many would assert that nuclear deterrence worked. In a sense, it did. But that doesn’t mean it was a wise policy. If you play Russian roulette with one or two bullets in the cylinder, you are more likely to survive than not, but the stakes would need to be astonishingly high – or the value you place on your life inordinately low – for this to be a wise gamble.

We were dragooned into just such a gamble throughout the Cold War era. For my part, I would not have chosen to risk a one in three – or even a one in six – chance of a catastrophe that would have killed hundreds of millions and shattered the historic fabric of all European cities, even if the alternative were certain Soviet dominance of Western Europe. And, of course, the devastating consequences of thermo-nuclear war would have spread far beyond the countries that faced a direct threat, especially if a “nuclear winter” were triggered.

Nuclear war still looms over us: the only consolation is that there are about five times fewer weapons, and on lower alert, than the US and Russia deployed during the Cold War. However, there are now nine nuclear powers, and a higher chance than ever before that smaller nuclear arsenals might be used regionally, or even by terrorists. And studies by some of my Cambridge colleagues show that command and control systems are getting more vulnerable to cyberthreats. Moreover, we can’t rule out, later in the century, a standoff between new superpowers that could be handled less well (or less luckily) than the Cuba crisis was.

Nuclear weapons are 20th-century technology. But this century has brought surges in new technologies: bio, cyber and AI, whose implications I shall focus on.

And the backdrop to our present concerns is a world where humanity’s collective footprint is getting heavier. There are now twice as many of us as in the 1960s, about 7.8 billion, all more demanding of energy and resources.

The number of births per year, worldwide, peaked a few years ago and is going down in most countries. Nonetheless, world population is forecast to rise to around 9 billion by 2050. That’s partly because most people in the developing world are young. They are yet to have children, and they will live longer. And partly because the demographic transition hasn’t happened in, for instance, sub-Saharan Africa.

Despite doom-laden forecasts by the Club of Rome and Paul Ehrlich, food production has kept pace with rising population; famines still occur but they’re due to conflict or maldistribution, not overall scarcity. To feed 9 billion in 2050 will require further-improved agriculture – low-till, water-conserving, and GM crops – and maybe dietary innovations: converting insects into palatable food, and making artificial meat.

To quote Gandhi – enough for everyone’s need but not for everyone’s greed.

Projections beyond 2050 are uncertain. It’s not even clear whether there’ll be a global rise or fall. If, for whatever reason, families in Africa remain large then, according to the UN, that continent’s population could double again by 2100, to 4 billion, thereby raising the global population to 11 billion.

Optimists say that each extra mouth brings two hands and a brain. But it’s the geopolitical stresses – the inequalities between countries as well as within countries – that are most worrying. As compared to the fatalism of earlier generations, those in poor countries now know, via the internet, what they’re missing. And migration is easier. It’s a portent for disaffection and instability. Wealthy nations, especially those in Europe, should urgently promote growing prosperity in Africa, and not just for altruistic reasons.

And another thing: if humanity’s collective impact on land use and climate pushes too hard, the resultant “ecological shock” could irreversibly impoverish our biosphere. Extinction rates are rising; we’re destroying the book of life before we’ve read it.

Biodiversity is crucial to human wellbeing. But preserving the richness of our biosphere has value in its own right, over and above what it means to us humans. To quote the great Harvard ecologist EO Wilson, “mass extinction is the sin that future generations will least forgive us for”.

Professor Partha Dasgupta recently completed a report on the economics of biodiversity for the UK government, which hopefully will be as influential as Nick Stern’s report on climate back in 2006.

So the world’s getting more crowded. And there’s another firm prediction: it will get warmer. In contrast to population issues, climate change is certainly not under-discussed, though it is under-responded-to. The fifth IPCC report presented a spread of projections for different assumptions about future rates of fossil fuel use (and associated rises in CO2 concentration). The need for urgent action was highlighted in the update published in 2018.

Under “business as usual” scenarios we can’t rule out really catastrophic warming and tipping points triggering long-term trends such as the melting of Greenland’s icecap.

But even those who accept there’s a significant risk of climate catastrophe a century from now differ in how urgently they advocate action today. These divergences stem from differences in economics and ethics and, in particular, in how much obligation we should feel towards future generations – whether we discount long-term concerns or whether we pay an insurance premium to reduce the risk to future generations.

But – to insert a bit of good cheer – there’s a win-win roadmap to a low-carbon future. Nations should accelerate R&D into all forms of low-carbon energy generation; and into other technologies where parallel progress is crucial, especially storage (batteries, compressed air, pumped storage, flywheels, etc) and smart grids.

The faster these clean technologies advance, the sooner will their prices fall so they become affordable to, for instance, India, where more generating capacity will be needed, where the health of the poor is jeopardised by smoky stoves burning wood or dung, and where there would otherwise be pressure to build coal-fired power stations.

We should be evangelists for new technology, not luddites. Without it the world can’t provide sustainable energy, nourishing food and good health for an expanding and more demanding population. But many are anxious that it’s advancing so fast that we may not properly cope with it – and that its misuse can give us a bumpy ride this century.

Advances in microbiology – diagnostics, vaccines and antibiotics – offer prospects of containing natural pandemics. But the same research raises (and it’s my number-one fear) the prospect of engineered pandemics.

For instance, in 2012 groups in Wisconsin and the Netherlands showed that it was surprisingly easy to make the influenza virus more virulent and more transmissible – to some, this was a scary portent of things to come. Such “gain of function” experiments can be done in principle for coronaviruses.

The new Crispr technique for gene-editing is hugely promising, but there are ethical concerns about experiments on human embryos, for instance. And worries about possible runaway consequences of “gene drive” programmes to wipe out species as diverse as mosquitos or grey squirrels.

Regulation of biotech is needed. But I’d worry that whatever regulations are imposed, on prudential or ethical grounds, can’t be enforced worldwide – any more than the drug laws can – or the tax laws. Whatever can be done will be done by someone, somewhere.

And that’s a nightmare. Whereas an atomic bomb can’t be built without large-scale special-purpose facilities, biotech involves small-scale dual-use equipment.

The global village will have its village idiots and their idiocies can cascade globally. The rising empowerment of tech-savvy groups by biotech – and by cyber tech – will pose an intractable challenge to governments and aggravate the tension between freedom and privacy, and security.

These concerns are relatively near-term – within 10 or 15 years. What about 2050 and beyond?

On the bio front we might expect two things. A better understanding of the combination of genes that determine key human characteristics; and the ability to synthesise genomes that match these features. If it becomes possible for biohackers to “play God on a kitchen table”, our ecology (and even our species) may not survive unscathed.

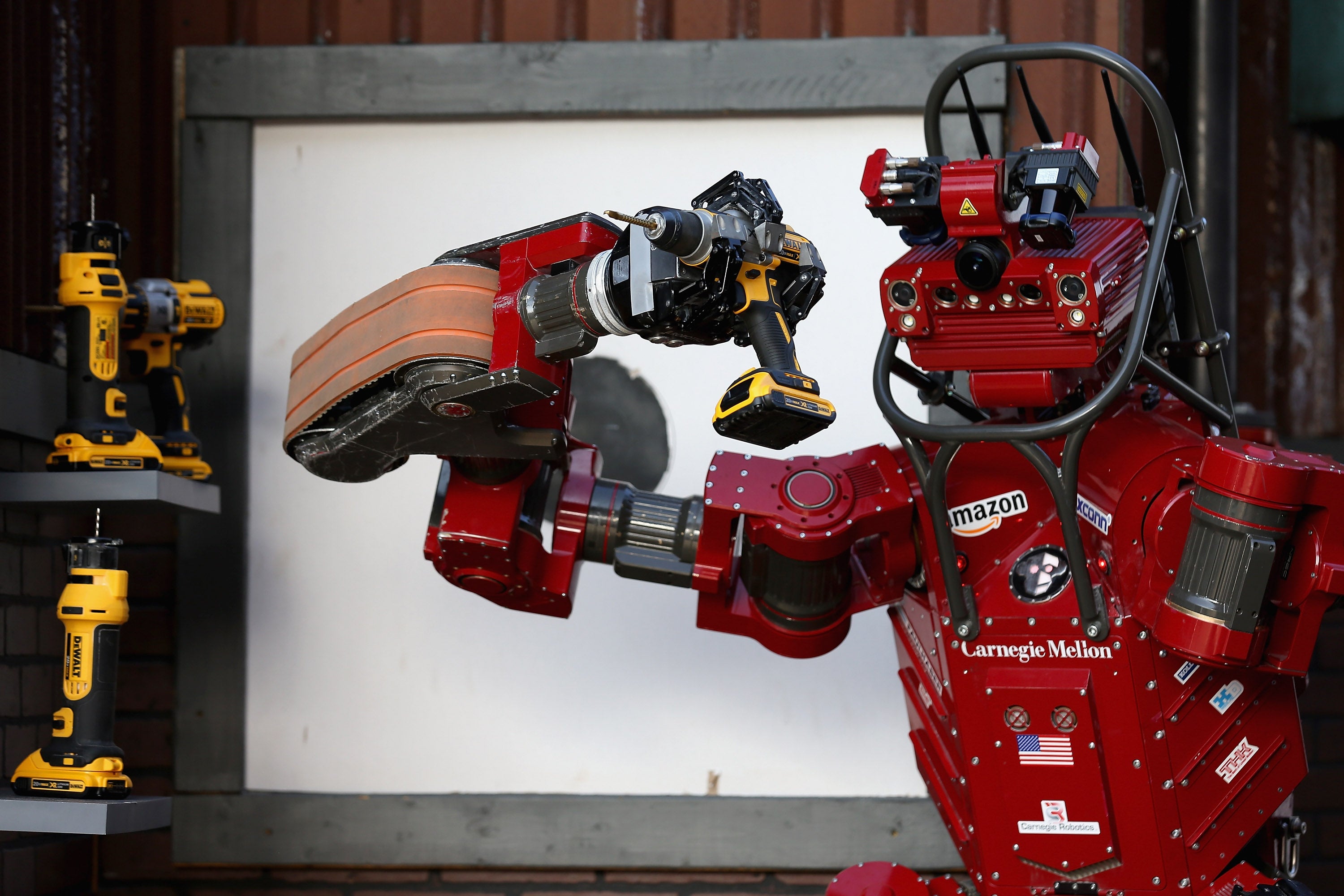

And what about another transformative technology: robotics and artificial intelligence (AI)? DeepMind’s AlphaGo Zero computer famously beat human champions in the games of Go and chess. It was given just the rules and “trained” by playing against itself over and over again, for just a few hours.

Already AI can cope better than humans with complex fast-changing networks – traffic flow, or electric grids. The Chinese could have an efficient planned economy that Marx or Stalin could only dream of. And it can help science too: protein folding, drug development, and perhaps even settle whether string theory can really describe our universe.

The societal implications of AI are already ambivalent. The incipient shifts in the nature of work have been addressed in several excellent books by economists and social scientists. AI systems will become more intrusive and pervasive. Records of all our movements, our health, and our financial transactions will be in the cloud, managed by a multinational quasi-monopoly.

If we’re sentenced to a term in prison, recommended for surgery, or even given a poor credit rating, we would expect the reasons to be accessible to us – and contestable by us. If such decisions were delegated to algorithms, we would be entitled to feel uneasy, even if presented with compelling evidence that, on average, machines make better decisions than the humans they have usurped.

The “arms race” between cybercriminals and those trying to defend against them will become still more expensive and vexatious. Many experts think that AI, like synthetic biotech, already needs guidelines for “responsible innovation”. Again CSER and its sister institutions have produced important reports. Ethical tensions are already emerging when AI moves from the research phase to being a potentially massive money-spinner for global companies.

It’s the speed of computers that allows them to learn on big training sets. But, as Stuart Russell in particular has reminded us, learning about human behaviour won’t be so easy for them because it involves observing real people in real homes or workplaces. A machine would be sensorily deprived by the slowness of real life – much as we would be if we had to watch trees grow.

And robots are still clumsier than a child in moving pieces on a real chessboard. They can’t jump from tree to tree like a squirrel. But sensor technology is advancing fast. We don’t know how fast. And this leads to a digression: it’s always harder to forecast the speed of technological advances than their direction. Sometimes there’s a spell of exponential progress – like the spread of IT and smartphones in the past decade. But in the longer-term it’s like a sigmoid curve.

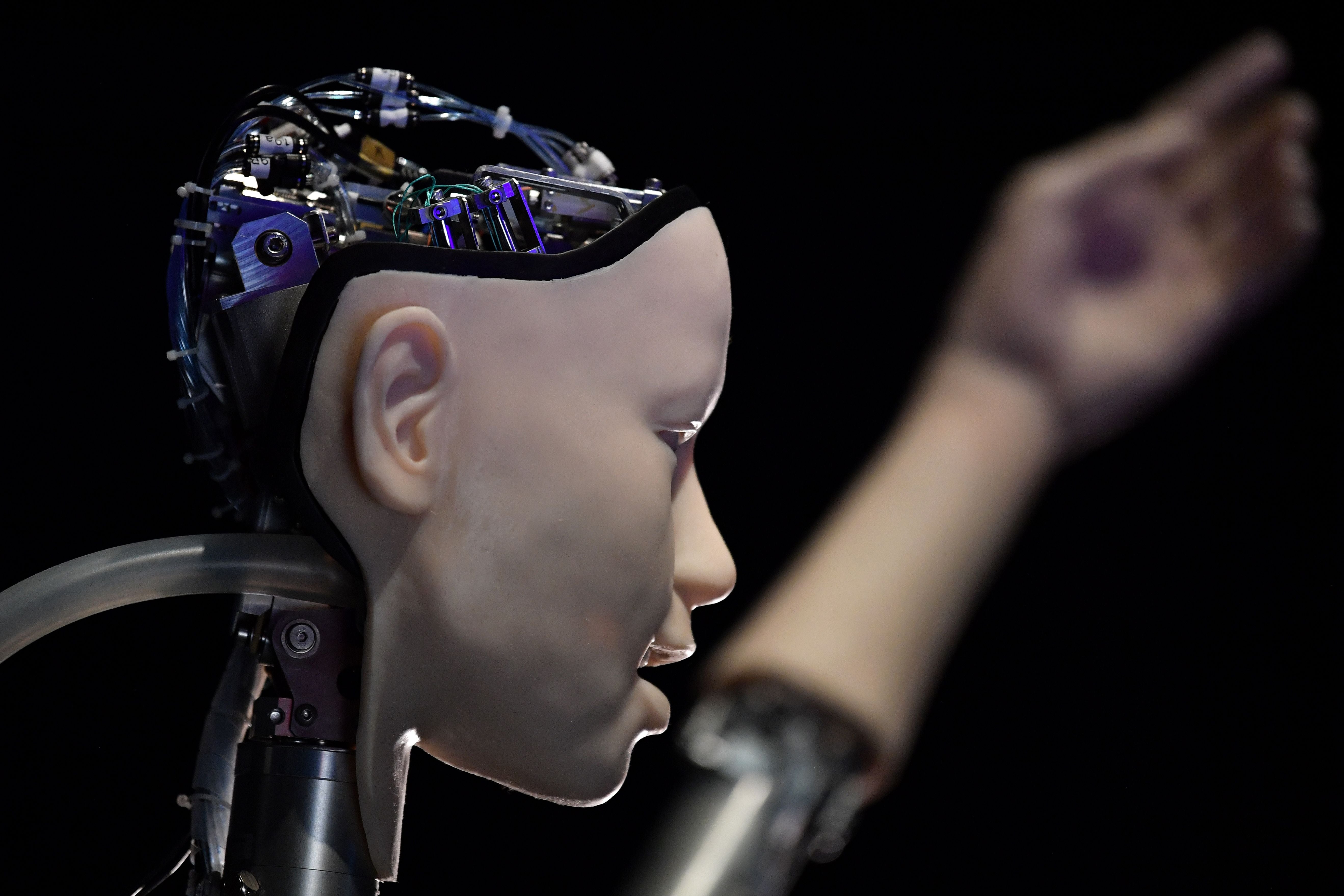

But let’s look still further ahead. What if a machine developed a mind of its own? Would it stay docile or go rogue? Futuristic books by Bostrom and Tegmark portray a dark side – where AI gets out of its box, infiltrates the internet of things, pursues goals misaligned with human interest, and treats humans as an encumbrance.

Some AI pundits take this seriously and think the field already needs guidelines – just as biotech does. But others, like Rodney Brooks (inventor of the Baxter robot), regard these concerns as premature and think it will be a long time before AI will worry us more than real stupidity.

Be that as it may, it’s likely that society will be transformed by autonomous robots, even though the jury’s out on whether they’ll be idiot savants or display superhuman capabilities – and whether we should worry more about breakdowns and bugs, or about being outsmarted.

The futurologist Ray Kurzweil, now working at Google, argues that once machines have surpassed human capabilities, they could themselves design and assemble a new generation of even more powerful ones – an intelligence explosion. He wrote a book called The Age of Spiritual Machines where he predicted that humans would transcend biology by merging with computers. In old-style spiritualist parlance, they would go over to the other side.

We then confront the classic philosophical problem of personal identity. Could your brain be downloaded into a machine? If so, in what sense would it still be you? Should you be relaxed about your original body then being destroyed? What would happen if several clones were made of you? These are ancient conundrums for philosophers, but practical ethicists may, one distant day, need to address them.

But Kurzweil is worried that his nirvana may not happen in his lifetime. So he wants his body frozen until it’s reached. A company in Arizona will freeze and store your body with liquid nitrogen, so that when immortality’s on offer you can be resurrected or your brain downloaded.

I'd rather end my days in an English churchyard than an American refrigerator

But of course research on ageing is being seriously prioritised. Will the benefits be incremental? Or is ageing a disease that can be cured? Dramatic life-extension would plainly be a real wild card in population projections, with huge social ramifications. But it may happen, along with human enhancement in other forms.

Indeed it’s at least, surely, on the cards that human beings may become malleable through the deployment of genetic modification and cyborg technologies. Moreover, this future evolution – a kind of secular intelligent design – would take only centuries in contrast to the thousands of centuries needed for Darwinian evolution.

This is a game changer. When we admire the literature and artefacts that have survived from antiquity we feel an affinity, across a time gulf of thousands of years, with those ancient artists and their civilisations. But we can have zero confidence that the dominant intelligences a few centuries hence will have any emotional resonance with us – even though they may have an algorithmic understanding of how we behaved.

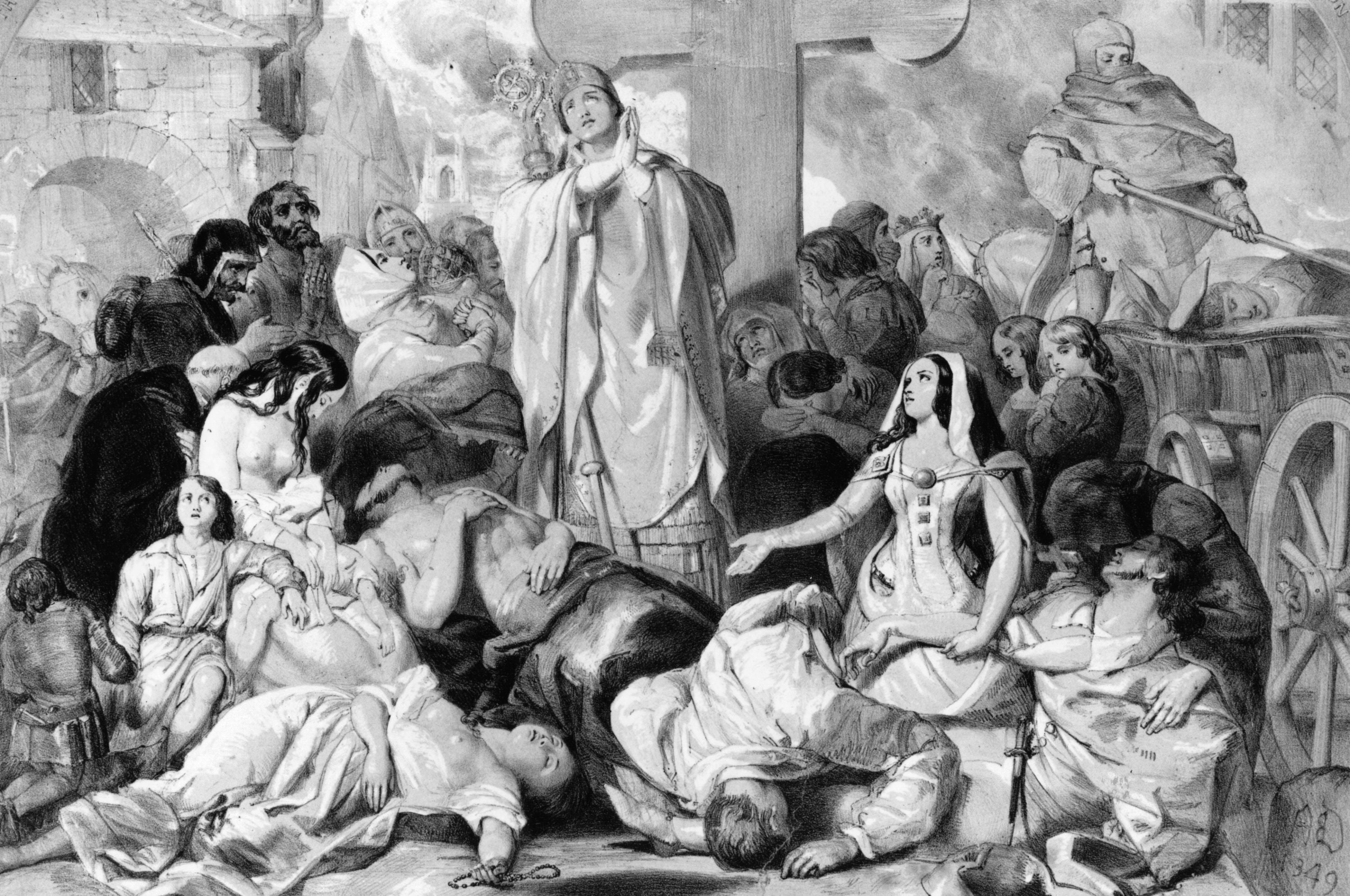

Back now to the nearer term: the historical record reveals episodes when civilisations have crumbled and even been extinguished. Famously, Jared Diamond – and now Luke Kemp and others – are cataloguing such events.

But the difference now is that our world is so interconnected that a catastrophe couldn’t hit any region without its consequences cascading globally. That’s why we need to contemplate a collapse – societal or ecological – that would be a truly global setback.

The setback could be temporary. On the other hand, it could cascade and spread so devastatingly (and entail so much environmental or genetic degradation) that the survivors could never regenerate a civilisation at the present level.

But this prompts the question: could there be a separate class of extreme events that would be curtains for us all, catastrophes that could snuff out all humanity? This has been discussed in the context of biological or high energy physics experiments that create conditions that have never occurred naturally.

Can scientists credibly give assurances that their experiments will be safe? We may offer huge odds against the sun not rising tomorrow, or against a fair die giving 100 sixes in a row, because we’re confident that we understand these things. But if our understanding is shaky, as it plainly is at the scientific frontiers, we can’t really assign a probability.

Biologists should avoid creation of potentially devastating genetically modified pathogens, or large-scale modification of the human germ line. Cyber experts are aware of the risk of a cascading breakdown in global infrastructure. Innovators who are furthering the beneficent uses of advanced AI should avoid scenarios where a machine takes over. Many of us are inclined to dismiss these risks as science fiction, but given the stakes they should not be ignored, even if deemed highly improbable.

On the other hand, innovations have an upside too. Application of the “precautionary principle” has an opportunity cost – “the hidden cost of saying no”.

And, by the way, the priority we should accord to truly existential disasters depends on an ethical question that has been discussed by the philosopher Derek Parfit: the rights of those who aren’t yet born.

Consider two scenarios: scenario A wipes out 90 per cent of humanity; scenario B wipes out 100 per cent. How much worse is B than A? Some would say 10 per cent worse: the body count is 10 per cent higher. But Parfit argued that B might be incomparably worse because human extinction forecloses the existence of billions, even trillions, of future people – and indeed an open-ended post-human future spreading far beyond the Earth.

Some philosophers criticise Parfit’s argument, denying that “possible people” should be weighted as much as actual ones (“We want to make more people happy, not to make more happy people”.) And even if one takes these naive utilitarian arguments seriously, one should note that if aliens already existed, terrestrial expansion, by squeezing their habitats, might make a net negative contribution to overall cosmic contentment!

However, aside from these intellectual games about possible people, the prospect of an end to the human story would sadden those of us now living. Most of us, aware of the heritage we’ve been left by past generations, would be depressed if we believed there would not be many generations to come.

I think it is worth considering such extreme scenarios as a thought experiment. We can’t rule out human-induced threats far worse than those on our current risk register. Indeed, we have zero grounds for confidence that we can survive the worst that future technologies could bring.

They’re a mega-version of the issues that arise in climate policy, where it is controversial how much weight we should give to those who will live a century from now. It also influences our attitude to global population trends.

But I’ll conclude by reverting to nearer-term concerns. Opinion polls show, unsurprisingly, that younger people, who expect to survive most of the century, are more engaged and anxious about long-term global issues – and their activism gives ground for hope.

What should be our message to them?

It’s surely that there’s no scientific impediment to achieving a sustainable world, where all enjoy a lifestyle better than those in the west do today. We live under the shadow of new risks but these can be minimised by a culture of “responsible innovation”, especially in fields like biotech, advanced AI and geo-engineering. And by reprioritising the thrust of the world’s technological effort.

We can be technological optimists. But intractable politics and sociology engender pessimism. The scenarios I’ve described – environmental degradation, unchecked climate change, and unintended consequences of advanced technology – could trigger serious, even catastrophic, setbacks to our society.

And most of the challenges are global. Coping with Covid-19 is a global challenge. And the threats of potential shortages of food, water, and natural resources – and transitioning to low carbon energy – can’t be solved by each nation separately. Nor can the regulation of potentially threatening innovations, especially those spearheaded by globe-spanning commercial conglomerates. Indeed, a key issue is whether, in a new world order, nations need to give up more sovereignty to new organisations along the lines of the IAEA and WHO.

Scientists have an obligation to promote beneficial applications of their work and warn against the downsides. Universities should offer their staff’s expertise, and their convening power, to assess which scary scenarios can be dismissed as science fiction and how best to avoid the serious ones. We in Cambridge have set up a centre to address just these issues.

Politicians focus on immediate threats such as Covid-19, but they won’t prioritise the global and long-term measures needed to deal with the threat to climate and biodiversity unless the press and their inboxes keep these high on their agenda.

Science advisors to government have limited influence except in emergencies. They must enhance their leverage by involvement with NGOs, via blogging and journalism, supporting charismatic individuals – be they David Attenborough, Bill Gates or Greta Thunberg – and the media to amplify their voice. Politicians will make wise long-term decisions but only if they feel they won’t lose votes.

I’ll end with another flashback – not just to the 1960s but right back to the middle ages. For medieval people, the entire cosmology spanned only a few thousand years. They were bewildered and helpless in the face of floods and pestilences – and prone to irrational dread. Large parts of the earth were terra incognita. But they built cathedrals – constructed with primitive technology by masons who knew they wouldn’t live to see them finished – vast and glorious buildings that still inspire us centuries later.

Our horizons, in space and time, are now vastly extended, as are our resources and knowledge. But we don’t plan centuries ahead. This seems a paradox. But there is a reason. Medieval lives played out against a backdrop that changed little from one generation to the next. They were confident that they’d have grandchildren who would appreciate the finished cathedral. But for us, unlike for them, the next century will be drastically different from the present. We can’t foresee it, so it’s harder to plan for it. There is now a huge disjunction between the ever-shortening timescales of social and technical change and the billion-year timespans of biology, geology, and cosmology.

“Space-ship Earth” is hurtling through the void. Its passengers are anxious and fractious. Their life-support system is vulnerable to disruption and break-downs. But there is too little planning – too little horizon-scanning.

We need to think globally, we need to think rationally, we need to think long term, empowered by 21st-century technology but guided by values that science alone can’t provide.
